Automating Everyday Tasks: Form Filling, Web Scraping, and Testing with Python

Imagine a world where your daily tasks — from filling out repetitive forms to scraping data from the web or running complex tests — are completed at the click of a button. Sounds futuristic, right? Well, with Python, this is not just possible; it's incredibly accessible. Whether you're a programming geek, a budding developer, or someone curious about technology, this guide will walk you through how Python can transform your daily workflows.

Python is a powerhouse for task automation, offering tools and libraries that are easy to learn and implement. In this post, we'll explore form-filling automation, web scraping, and automated testing with Python, and how each can simplify workflows and save time.

Why Python for Automation?

Before we dive into the nitty-gritty, let’s understand why Python is the go-to language for task automation:

- Ease of Learning: Python's syntax is simple and readable, making it perfect for both beginners and experts.

- Extensive Libraries: Libraries like Selenium, Beautiful Soup, and PyTest offer ready-to-use tools for automating various tasks.

- Community Support: A vibrant community of programming geeks ensures there’s always help available online.

- Versatility: Whether it's browser automation, data extraction, or testing, Python can handle it all.

Section 1: Form Filling with Python

The Problem: Filling out online forms manually is tedious and prone to errors, especially when handling multiple entries.

The Solution: Python's Selenium library can automate this process. Selenium is a browser automation tool that interacts with web pages just like a human user.

How to Set Up Selenium for Form Filling:

- Install Selenium:

      pip install selenium

- Download a WebDriver: Choose a driver compatible with your browser (e.g., ChromeDriver for Google Chrome). Selenium 4.6 and later can usually locate or download a matching driver automatically through Selenium Manager.

- Write Your Script: Here's a basic example of a form-filling script:

      from selenium import webdriver
      from selenium.webdriver.common.by import By

      # Initialize WebDriver
      driver = webdriver.Chrome()

      # Open the Form Page
      driver.get("https://example.com/form")

      # Fill Out the Form
      driver.find_element(By.NAME, "username").send_keys("JohnDoe")
      driver.find_element(By.NAME, "email").send_keys("johndoe@example.com")
      driver.find_element(By.NAME, "password").send_keys("securepassword123")
      driver.find_element(By.NAME, "submit").click()

      # Close the Browser
      driver.quit()

Advanced Use Cases:

- Use libraries like PyAutoGUI for desktop form filling.

- Integrate with APIs for dynamic data input.
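Handling multiple entries usually means looping over a data file. The following is a minimal sketch, assuming a CSV whose column headers match the form's field names (username, email, and password, as in the script above). The helper names `load_entries` and `submit_entries` are hypothetical and not part of Selenium, and the sample rows are made up.

```python
import csv
import io

REQUIRED_FIELDS = ("username", "email", "password")  # assumed form field names


def load_entries(csv_text):
    """Parse CSV text into a list of dicts, skipping rows with missing values."""
    reader = csv.DictReader(io.StringIO(csv_text))
    entries = []
    for row in reader:
        # Keep only rows where every required field is present and non-empty.
        if all((row.get(field) or "").strip() for field in REQUIRED_FIELDS):
            entries.append({field: row[field].strip() for field in REQUIRED_FIELDS})
    return entries


def submit_entries(entries, url):
    """Fill and submit the form once per entry (needs Selenium and a browser)."""
    # Imported lazily so load_entries can be used without Selenium installed.
    from selenium import webdriver
    from selenium.webdriver.common.by import By

    driver = webdriver.Chrome()
    try:
        for entry in entries:
            driver.get(url)  # reload a blank form for each entry
            for field, value in entry.items():
                driver.find_element(By.NAME, field).send_keys(value)
            driver.find_element(By.NAME, "submit").click()
    finally:
        driver.quit()  # always close the browser, even if a lookup fails


# Made-up sample data: the second row lacks an email and is skipped.
sample = (
    "username,email,password\n"
    "JohnDoe,johndoe@example.com,securepassword123\n"
    "JaneDoe,,pw\n"
)
entries = load_entries(sample)
print(entries)
```

Calling `submit_entries(entries, "https://example.com/form")` would then open the form once per valid row. Validating the rows before the browser starts keeps bad data from failing halfway through a batch.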

Section 2: Web Scraping with Python

The Problem: Manually collecting data from websites is time-consuming and inefficient.

The Solution: Python's libraries like Beautiful Soup and Scrapy allow you to scrape and parse data from web pages easily.

Tools for Web Scraping:

- Beautiful Soup: Ideal for small-scale projects.

- Scrapy: Perfect for larger, scalable scraping projects.

- Selenium: Useful for scraping dynamic content.

Beautiful Soup in Action:

Here’s a quick example of scraping titles from a blog:

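    import requests
    from bs4 import BeautifulSoup

    # Fetch the Web Page
    url = "https://example-blog.com"
    response = requests.get(url, timeout=10)
    response.raise_for_status()  # stop early on HTTP errors such as 404

    # Parse the HTML
    soup = BeautifulSoup(response.text, 'html.parser')

    # Extract Data: print the text of every <h2> heading
    titles = soup.find_all('h2')
    for title in titles:
        print(title.text)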

Scrapy for Advanced Scraping:

Scrapy allows you to build a complete web crawler. Here’s an example command to start a Scrapy project:

scrapy startproject myproject

Best Practices:

- Respect robots.txt and website terms of service.

- Throttle your requests to avoid overloading the site or getting blocked, and use proxies only where the site's terms allow it.
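Respecting robots.txt can be checked in code. Here is a minimal sketch using the standard library's `urllib.robotparser`. The rules and the `my-scraper` user agent are made-up examples; a real script would call `set_url(...)` and `read()` to fetch the site's actual file instead of parsing hard-coded lines.

```python
from urllib.robotparser import RobotFileParser

# Made-up rules for illustration; normally fetched with set_url() + read().
ROBOTS_LINES = [
    "User-agent: *",
    "Disallow: /private/",
    "Crawl-delay: 2",
]

parser = RobotFileParser()
parser.parse(ROBOTS_LINES)


def allowed(url, user_agent="my-scraper"):
    """Return True if the parsed rules permit this user agent to fetch the URL."""
    return parser.can_fetch(user_agent, url)


# Seconds to wait between requests, falling back to 1 if none is declared.
delay = parser.crawl_delay("my-scraper") or 1

print(allowed("https://example.com/blog"))
print(allowed("https://example.com/private/report"))
print(delay)
```

In a scraping loop, skipping any URL for which `allowed(url)` is false and calling `time.sleep(delay)` between requests covers both the robots.txt rule and basic rate limiting.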

For more in-depth knowledge, visit Scrapy's documentation.

Section 3: Automated Testing with Python

The Problem: Testing software manually is repetitive and prone to human error.

The Solution: Automated tests check, on every run, that your code or application still behaves as expected, so regressions surface early. Python offers powerful tools like PyTest, unittest, and Selenium for testing.

Setting Up PyTest for Testing:

- Install PyTest:

      pip install pytest

- Write a Test Case:

      def test_sum():
          assert sum([1, 2, 3]) == 6, "Should be 6"

- Run the Test:

      pytest test_script.py
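PyTest collects functions whose names start with `test_` from files named `test_*.py` or `*_test.py`, so a test file is ordinary Python. Here is a slightly fuller sketch, where `normalize_email` is a hypothetical function under test invented for this example:

```python
def normalize_email(raw):
    """Hypothetical function under test: trim whitespace and lowercase."""
    cleaned = raw.strip().lower()
    if "@" not in cleaned:
        raise ValueError(f"not an email address: {raw!r}")
    return cleaned


def test_normalize_strips_and_lowercases():
    assert normalize_email("  JohnDoe@Example.com ") == "johndoe@example.com"


def test_normalize_rejects_missing_at_sign():
    # A plain try/except keeps this file free of imports.
    try:
        normalize_email("not-an-email")
    except ValueError:
        pass
    else:
        raise AssertionError("expected ValueError")
```

Saved as test_script.py, both tests would run with the command shown above. If PyTest is imported in the file, the second test is more commonly written with the `pytest.raises(ValueError)` context manager.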

Selenium for End-to-End Testing:

Automate UI testing with Selenium:

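    from selenium import webdriver

    driver = webdriver.Chrome()
    try:
        driver.get("https://example.com")
        # The check passes only if the page title contains this text
        assert "Example Domain" in driver.title
    finally:
        driver.quit()  # close the browser even if the assertion fails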

Continuous Integration:

Integrate automated tests into CI/CD pipelines using tools like Jenkins or GitHub Actions.

Learn more about automated testing with PyTest's documentation.

Section 4: Integrating Automation into Workflows

Automation becomes exceptionally powerful when individual tasks like form filling, web scraping, and testing are combined into cohesive workflows. Instead of performing these tasks in isolation, you can link them together to create end-to-end solutions. This integration saves time, eliminates human error, and ensures efficiency, making Python an indispensable tool for automating workflows.

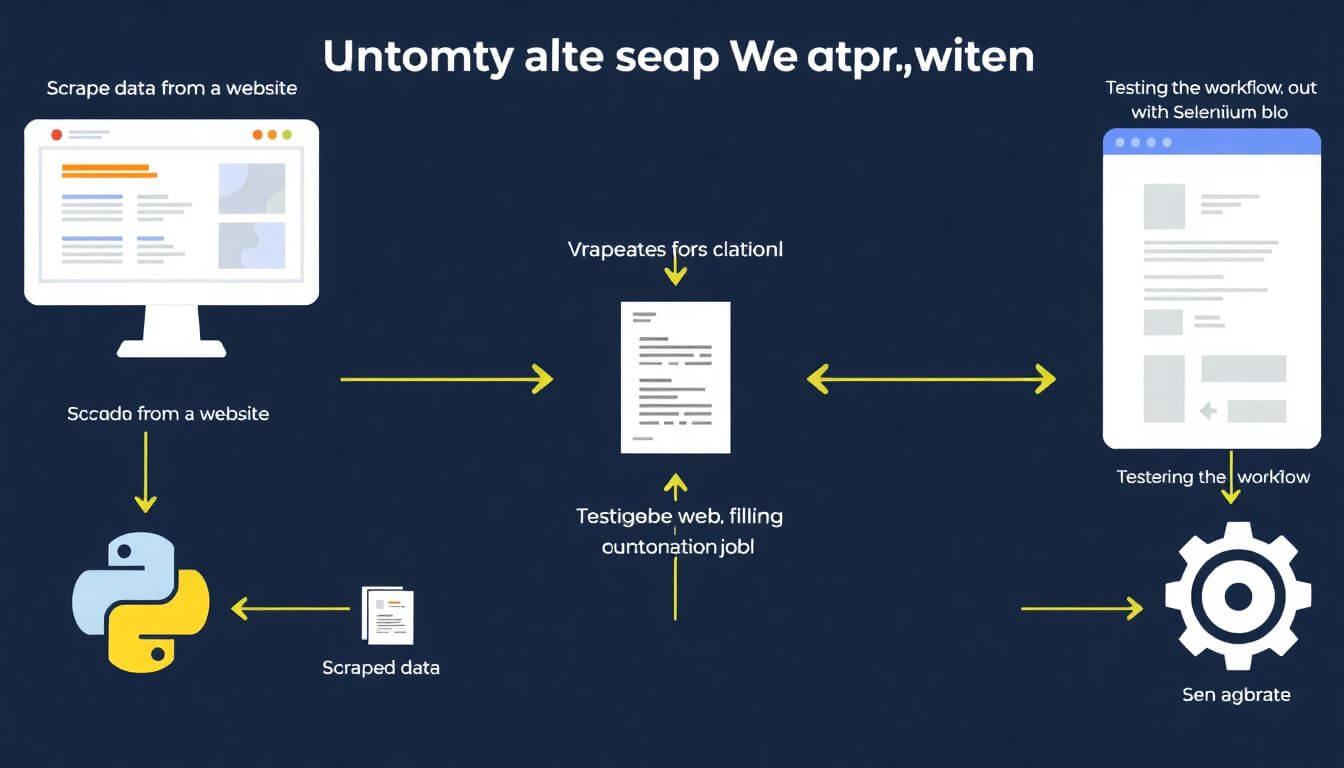

Combining Form Filling, Web Scraping, and Testing

Let’s break down how you can combine these tasks to create a seamless workflow:

1. Scrape Data from a Website

The first step is to gather the data you need for your process. For example, imagine you’re automating a job application system where you need to extract job postings from multiple websites. Python's web scraping tools like Beautiful Soup, Scrapy, or Selenium can help.

Here’s a basic outline:

- Use Selenium if the website has dynamic content (e.g., uses JavaScript to load).

- Use Beautiful Soup for static HTML content.

Example: Scraping Job Data

    import requests
    from bs4 import BeautifulSoup

    # Fetch a webpage with job listings
    url = "https://example.com/jobs"
    response = requests.get(url, timeout=10)
    response.raise_for_status()  # stop early on HTTP errors
    soup = BeautifulSoup(response.text, 'html.parser')

    # Extract job details
    jobs = []
    for job in soup.find_all('div', class_='job-listing'):
        title = job.find('h2').text
        location = job.find('span', class_='location').text
        jobs.append({'title': title, 'location': location})

    print(jobs)

This data can then be structured in a format like JSON or CSV for further use.
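As a rough illustration of that step, the sketch below serializes a list of job dictionaries to both JSON and CSV using only the standard library. The two sample records are made up, and `jobs_to_json` and `jobs_to_csv` are hypothetical helper names.

```python
import csv
import io
import json

# Made-up records in the same shape the scraper above produces.
jobs = [
    {"title": "Software Engineer", "location": "Mumbai"},
    {"title": "Data Analyst", "location": "Remote"},
]


def jobs_to_json(records):
    """Serialize the job dicts to a JSON string."""
    return json.dumps(records, indent=2)


def jobs_to_csv(records):
    """Serialize the job dicts to CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["title", "location"])
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


print(jobs_to_json(jobs))
print(jobs_to_csv(jobs))
```

To write to disk instead, open the target file with `newline=""` and pass the file object to `csv.DictWriter` in place of the in-memory buffer.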

2. Use the Data to Populate Forms

Once you have the scraped data, the next step is to automatically fill forms using the extracted information. For instance, if the job data contains fields like the position, location, and application URL, you can use Python’s Selenium library to input these details into forms.

Example: Automating Form Filling with Scraped Data

    from selenium import webdriver
    from selenium.webdriver.common.by import By

    # Initialize WebDriver
    driver = webdriver.Chrome()

    # Open the job application page
    driver.get("https://example.com/apply")

    # Fill out the form with scraped data
    scraped_data = {'name': 'John Doe', 'email': 'john@example.com', 'job_title': 'Software Engineer'}
    driver.find_element(By.NAME, 'name').send_keys(scraped_data['name'])
    driver.find_element(By.NAME, 'email').send_keys(scraped_data['email'])
    driver.find_element(By.NAME, 'job_title').send_keys(scraped_data['job_title'])
    driver.find_element(By.NAME, 'submit').click()

    # Close the browser
    driver.quit()

By combining web scraping and form filling, you’ve already automated two major steps in the workflow.

3. Test the Workflow to Ensure Accuracy

After automating the scraping and form-filling processes, it’s essential to validate the workflow to ensure it works as expected. Python’s PyTest or unittest libraries can be used to create test cases for this purpose.

Example: Testing Form Submission

    def test_form_submission():
        # Simulate the form submission process
        # (simulate_form_submission is a placeholder you need to implement)
        form_data = {'name': 'John Doe', 'email': 'john@example.com', 'job_title': 'Software Engineer'}
        submitted_data = simulate_form_submission(form_data)

        # Validate the result
        assert submitted_data['status'] == 'success', "Form submission failed!"
        assert submitted_data['name'] == 'John Doe', "Name mismatch in submission!"
        assert submitted_data['email'] == 'john@example.com', "Email mismatch in submission!"

These tests ensure that:

- Scraped data is accurately input into the forms.

- The forms are submitted without errors.

- The entire workflow operates as intended.
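The test above calls `simulate_form_submission`, which this article does not define. One hypothetical stand-in, useful for exercising the test logic without a browser, validates the fields and echoes them back. A real version would drive Selenium and read the confirmation page instead. The field names are assumed to match the earlier form example.

```python
REQUIRED = ("name", "email", "job_title")  # assumed form fields


def simulate_form_submission(form_data):
    """Hypothetical stand-in: validate fields and echo them with a status."""
    missing = [field for field in REQUIRED if not form_data.get(field)]
    if missing or "@" not in form_data.get("email", ""):
        return {"status": "error", "missing": missing}
    # Echo the submitted values so a test can compare them field by field.
    return {"status": "success", **form_data}


def test_form_submission():
    form_data = {
        "name": "John Doe",
        "email": "john@example.com",
        "job_title": "Software Engineer",
    }
    submitted = simulate_form_submission(form_data)
    assert submitted["status"] == "success", "Form submission failed!"
    assert submitted["name"] == "John Doe", "Name mismatch in submission!"
    assert submitted["email"] == "john@example.com", "Email mismatch in submission!"


def test_missing_email_is_rejected():
    result = simulate_form_submission({"name": "John Doe", "job_title": "Engineer"})
    assert result["status"] == "error"
    assert result["missing"] == ["email"]
```

Covering a failure case alongside the happy path gives some confidence that the check can actually fail, which a test that only ever passes cannot show.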

Task Automation Tools

For integrating such workflows, you have two main options: no-code tools or custom Python scripts.

1. Zapier and Integromat

If coding is not your forte or you need a quick setup, tools like Zapier and Integromat (now Make) are great for no-code automation:

- Zapier: Connects apps like Google Sheets, Gmail, and webhooks to automate tasks.

- Integromat: Offers more advanced workflows with visual diagramming.

Example Workflow Using Zapier:

- Scrape job data using a Python script and save it to Google Sheets.

- Use Zapier to trigger a workflow every time new data is added.

- Automatically populate forms using the scraped data.

2. Custom Python Scripts

For more complex workflows, Python provides unmatched flexibility. A single Python script can:

- Scrape data from multiple websites.

- Fill out forms based on scraped data.

- Run tests to ensure accuracy.

- Notify you of completion via email or text message (using the standard-library smtplib module or a service such as Twilio).
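The notification step could look roughly like this with the standard library. It is a sketch under assumptions: the addresses, subject line, and the `localhost:25` server are placeholders, and `build_notification` and `send_notification` are hypothetical helper names.

```python
import smtplib
from email.message import EmailMessage


def build_notification(sender, recipient, jobs_processed):
    """Create the completion email without sending it."""
    message = EmailMessage()
    message["From"] = sender
    message["To"] = recipient
    message["Subject"] = "Automation workflow finished"
    message.set_content(f"Processed {jobs_processed} job listing(s).")
    return message


def send_notification(message, host="localhost", port=25):
    """Send via an SMTP server; host and port here are placeholders."""
    with smtplib.SMTP(host, port) as server:
        server.send_message(message)


# Build (but do not send) a message with placeholder addresses.
note = build_notification("bot@example.com", "me@example.com", 3)
print(note["Subject"])
```

Keeping message construction separate from sending makes the first part easy to test. Most hosted mail servers also require `starttls()` and `login()` before `send_message`.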

Example: End-to-End Workflow

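    # scrape_jobs and fill_form are placeholders for functions built
    # from the scraping and form-filling examples earlier in this section.
    def main():
        # Step 1: Scrape data
        data = scrape_jobs("https://example.com/jobs")

        # Step 2: Fill out forms
        for job in data:
            fill_form(job)

        # Step 3: Test workflow (raises AssertionError on failure)
        test_form_submission()

        # Notify success
        print("Workflow completed successfully!")

    if __name__ == "__main__":
        main()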

This approach allows you to customize every aspect of the automation, making it highly adaptable to different tasks.
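The outline above leaves `scrape_jobs` and `fill_form` undefined. One way to keep such a script adaptable is to pass each step in as a function, so the steps can be swapped or tested independently. This is only a sketch: `run_workflow` and the `fake_*` stand-ins are hypothetical names, and real steps would wrap the scraper and Selenium code shown earlier.

```python
def run_workflow(scrape, fill, notify):
    """Run scrape -> fill -> notify, collecting failures instead of stopping."""
    submitted, failed = [], []
    for job in scrape():
        try:
            fill(job)
            submitted.append(job)
        except Exception as error:  # one bad form should not abort the batch
            failed.append((job, str(error)))
    notify(f"{len(submitted)} submitted, {len(failed)} failed")
    return submitted, failed


# Stand-in steps for illustration only.
def fake_scrape():
    return [{"title": "Software Engineer"}, {"title": ""}]


def fake_fill(job):
    if not job["title"]:
        raise ValueError("missing title")


messages = []
submitted, failed = run_workflow(fake_scrape, fake_fill, messages.append)
print(messages[0])
```

Recording failures per job, rather than asserting once at the end, lets a long batch finish and report exactly which entries need attention.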

Why Automate Workflows with Python?

Integrating web scraping, form filling, and testing into workflows not only saves time but also ensures:

- Accuracy: Reduces human errors.

- Scalability: Can handle large amounts of data.

- Efficiency: Streamlines repetitive tasks.

Python's extensive ecosystem of libraries makes it well suited to these challenges, letting developers automate even complex workflows with relatively little code.

Why Choose Prateeksha Web Design?

At Prateeksha Web Design, we specialize in creating custom web solutions that integrate seamlessly with automation tools. Whether you need a platform optimized for web scraping or a form-filling workflow, our team of experts ensures that your business stays ahead of the curve.

Here’s why we stand out:

- Expertise in Python-based solutions for form filling and testing.

- Tailored approaches for businesses of all sizes.

- Ongoing support to keep your automation tools running smoothly.

Conclusion

Python is a game-changer for automating repetitive tasks like form filling, web scraping, and testing. With its extensive libraries and simple syntax, Python empowers programming geeks and beginners alike to create powerful automation scripts.

If you're looking to level up your workflows or need help setting up automation for your business, Prateeksha Web Design is here to help. Let’s simplify your tasks and boost productivity with Python!

Interested in learning more? Contact us today. For further exploration, check out Automate.io for more automation inspiration.

FAQs

- Why should I use Python for automation tasks? Python's easy-to-learn syntax, extensive libraries like Selenium and Beautiful Soup, community support, and versatility make it an ideal choice for automating repetitive tasks such as form filling, web scraping, and software testing.

- How can I get started with form filling automation using Python? You can start by installing the Selenium library with pip install selenium, downloading the appropriate WebDriver for your browser, and writing a script that uses Selenium to interact with forms on the web.

- What are the best practices for web scraping with Python? Always respect the robots.txt file of the website you are scraping, limit the frequency of your requests to avoid getting blocked, and ensure your scraping activities comply with the site's terms of service.

- Can I automate testing with Python? If so, how? Yes, you can automate testing using libraries like PyTest and unittest. Start by installing PyTest with pip install pytest, then write test cases to verify the functionality of your code or application.

- How can I integrate multiple automation tasks into a single workflow? You can combine web scraping to collect data, use that data to fill out forms automatically, and then validate the entire process with automated tests. This can be done using Python scripts that link these tasks seamlessly for greater efficiency.